You work for a company that manages highly sensitive user data. You are designing the Google Kubernetes Engine (GKE) infrastructure for your company, including several applications that will be deployed in development and production environments. Your design must protect data from unauthorized access from other applications while minimizing the amount of management overhead required. What should you do?

Your organization stores all application logs from multiple Google Cloud projects in a central Cloud Logging project. Your security team wants to enforce a rule that each project team can only view their respective logs, and only the operations team can view all the logs. You need to design a solution that meets the security team's requirements, while minimizing costs. What should you do?

You are performing a semi-annual capacity planning exercise for your flagship service. You expect a service user growth rate of 10% month-over-month for the next six months. Your service is fully containerized and runs on a Google Kubernetes Engine (GKE) standard cluster across three zones with cluster autoscaling enabled. You currently consume about 30% of your total deployed CPU capacity, and you require resilience against the failure of a zone. You want to ensure that your users experience minimal negative impact as a result of this growth or as a result of zone failure, while you avoid unnecessary costs. How should you prepare to handle the predicted growth?
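The arithmetic behind this scenario can be sketched as follows. This is a rough illustration only, and it assumes that CPU demand scales linearly with user count and that deployed capacity is spread evenly across the three zones; neither assumption is stated in the question, and the function names are hypothetical.

```python
def growth_factor(monthly_rate: float, months: int) -> float:
    """Compound multiplier after `months` of month-over-month growth."""
    return (1 + monthly_rate) ** months


def projected_utilization(current: float, monthly_rate: float, months: int) -> float:
    """Share of today's deployed capacity needed after growth.

    Assumption: CPU demand grows in proportion to the number of users.
    """
    return current * growth_factor(monthly_rate, months)


def surviving_capacity_fraction(zones: int, failed: int = 1) -> float:
    """Fraction of capacity left when `failed` zones are lost.

    Assumption: capacity is split evenly across zones.
    """
    return (zones - failed) / zones


factor = growth_factor(0.10, 6)                  # 10% per month for six months
needed = projected_utilization(0.30, 0.10, 6)    # starting from 30% utilization
available = surviving_capacity_fraction(3)       # one of three zones down

print(f"growth factor: {factor:.3f}")
print(f"projected utilization of current capacity: {needed:.1%}")
print(f"capacity remaining with one zone down: {available:.1%}")
print("projected load fits in two zones:", needed <= available)
```

Under these assumptions, six months of compounding multiplies load by roughly 1.77, so the projected demand is about 53% of the capacity deployed today, which is below the two-thirds that remains if one zone fails.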

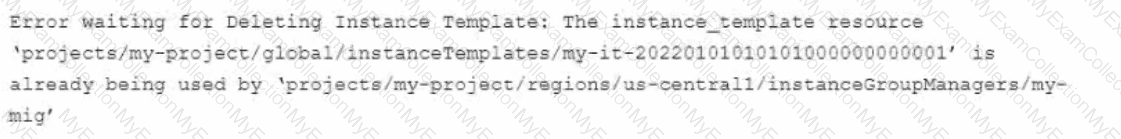

You use Terraform to manage an application deployed to a Google Cloud environment. The application runs on instances deployed by a managed instance group. The Terraform code is deployed by using a CI/CD pipeline. When you change the machine type on the instance template used by the managed instance group, the pipeline fails at the terraform apply stage with the following error message:

You need to update the instance template and minimize disruption to the application and the number of pipeline runs. What should you do?

You need to define SLOs for a high-traffic web application. Customers are currently happy with the application performance and availability. Based on current measurement, the 90th percentile of latency is 160 ms and the 95th percentile of latency is 300 ms over a 28-day window. What latency SLO should you publish?

Your company runs services by using multiple globally distributed Google Kubernetes Engine (GKE) clusters. Your operations team has set up workload monitoring that uses Prometheus-based tooling for metrics, alerts, and generating dashboards. This setup does not provide a method to view metrics globally across all clusters. You need to implement a scalable solution to support global Prometheus querying and minimize management overhead. What should you do?

Your team has an application built by using a Dockerfile. The build is executed from Cloud Build, and the resulting artifacts are stored in Artifact Registry. Your team is reporting that builds are slow. You need to increase build speed, while following Google-recommended practices. What should you do?

Your company recently migrated to Google Cloud. You need to design a fast, reliable, and repeatable solution for your company to provision new projects and basic resources in Google Cloud. What should you do?

You are running an application on Compute Engine and collecting logs through Stackdriver. You discover that some personally identifiable information (PII) is leaking into certain log entry fields. You want to prevent these fields from being written in new log entries as quickly as possible. What should you do?

Your company experiences bugs, outages, and slowness in its production systems. Developers use the production environment for new feature development and bug fixes. Configuration and experiments are done in the production environment, causing outages for users. Testers use the production environment for load testing, which often slows the production systems. You need to redesign the environment to reduce the number of bugs and outages in production and to enable testers to load test new features. What should you do?

You manage several production systems that run on Compute Engine in the same Google Cloud Platform (GCP) project. Each system has its own set of dedicated Compute Engine instances. You want to know how much it costs to run each of the systems. What should you do?

You support a stateless web-based API that is deployed on a single Compute Engine instance in the europe-west2-a zone. The Service Level Indicator (SLI) for service availability is below the specified Service Level Objective (SLO). A postmortem has revealed that requests to the API regularly time out. The timeouts are due to the API having a high number of requests and running out of memory. You want to improve service availability. What should you do?

Your company stores a large volume of infrequently used data in Cloud Storage. The projects in your company's CustomerService folder access Cloud Storage frequently, but store very little data. You want to enable Data Access audit logging across the company to identify data usage patterns. You need to exclude the CustomerService folder projects from Data Access audit logging. What should you do?

Your team uses Cloud Build for all CI/CD pipelines. You want to use the kubectl builder for Cloud Build to deploy new images to Google Kubernetes Engine (GKE). You need to authenticate to GKE while minimizing development effort. What should you do?

You are creating a CI/CD pipeline to perform Terraform deployments of Google Cloud resources. Your CI/CD tooling is running in Google Kubernetes Engine (GKE) and uses an ephemeral Pod for each pipeline run. You must ensure that the pipelines that run in the Pods have the appropriate Identity and Access Management (IAM) permissions to perform the Terraform deployments. You want to follow Google-recommended practices for identity management. What should you do?

Choose 2 answers

Your Cloud Run application writes unstructured logs as text strings to Cloud Logging. You want to convert the unstructured logs to JSON-based structured logs. What should you do?
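For context, Cloud Run forwards lines written to stdout and stderr to Cloud Logging, and a line that is a single JSON object is parsed as a structured entry, with `severity` and `message` treated as special fields. The following is a minimal sketch of emitting such lines with only the standard library; the helper name `log_structured` and the `order_id` field are hypothetical, and this is one possible approach rather than the only one.

```python
import json
import sys


def log_structured(message: str, severity: str = "INFO", **fields) -> str:
    """Write one JSON object per line to stdout and return the line.

    `severity` and `message` are special JSON fields recognized by Cloud Logging;
    any extra keyword arguments become additional structured fields.
    """
    entry = {"severity": severity, "message": message, **fields}
    line = json.dumps(entry)
    print(line, file=sys.stdout, flush=True)
    return line


# Instead of print("order created for 42"), emit a structured entry:
emitted = log_structured("order created", severity="NOTICE", order_id="hypothetical-42")
```

Keeping each entry on a single line matters here, because a JSON object that spans several lines would be ingested as separate text entries.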

You are using Terraform to manage infrastructure as code within a CI/CD pipeline. You notice that multiple copies of the entire infrastructure stack exist in your Google Cloud project, and a new copy is created each time a change to the existing infrastructure is made. You need to optimize your cloud spend by ensuring that only a single instance of your infrastructure stack exists at a time. You want to follow Google-recommended practices. What should you do?

Your company runs an e-commerce business. The application responsible for payment processing has structured JSON logging with the following schema:

Capture and access of logs from the payment processing application is mandatory for operations, but the jsonPayload.user_email field contains personally identifiable information (PII). Your security team does not want the entire engineering team to have access to PII. You need to stop exposing PII to the engineering team and restrict access to security team members only. What should you do?

You work for a global organization and are running a monolithic application on Compute Engine. You need to select the machine type for the application to use that optimizes CPU utilization by using the fewest number of steps. You want to use historical system metrics to identify the machine type for the application to use. You want to follow Google-recommended practices. What should you do?

You are running a web application that connects to an AlloyDB cluster by using a private IP address in your default VPC. You need to run a database schema migration in your CI/CD pipeline by using Cloud Build before deploying a new version of your application. You want to follow Google-recommended security practices. What should you do?

You are developing a Node.js utility on a workstation in Cloud Workstations by using Code OSS. The utility is a simple web page, and you have already confirmed that all necessary firewall rules are in place. You tested the application by starting it on port 3000 on your workstation in Cloud Workstations, but you need to be able to access the web page from your local machine. You need to follow Google-recommended security practices. What should you do?

You built a serverless application by using Cloud Run and deployed the application to your production environment. You want to identify the resource utilization of the application for cost optimization. What should you do?

You are responsible for the reliability of a high-volume enterprise application. A large number of users report that an important subset of the application’s functionality – a data intensive reporting feature – is consistently failing with an HTTP 500 error. When you investigate your application’s dashboards, you notice a strong correlation between the failures and a metric that represents the size of an internal queue used for generating reports. You trace the failures to a reporting backend that is experiencing high I/O wait times. You quickly fix the issue by resizing the backend’s persistent disk (PD). Now you need to create an availability Service Level Indicator (SLI) for the report generation feature. How would you define it?

Your team is designing a new application for deployment into Google Kubernetes Engine (GKE). You need to set up monitoring to collect and aggregate various application-level metrics in a centralized location. You want to use Google Cloud Platform services while minimizing the amount of work required to set up monitoring. What should you do?

You have migrated an e-commerce application to Google Cloud Platform (GCP). You want to prepare the application for the upcoming busy season. What should you do first to prepare for the busy season?

You are monitoring a service that uses n2-standard-2 Compute Engine instances that serve large files. Users have reported that downloads are slow. Your Cloud Monitoring dashboard shows that your VMs are running at peak network throughput. You want to improve the network throughput performance. What should you do?

Your company is creating a new cloud-native Google Cloud organization. You expect this Google Cloud organization to first be used by a small number of departments and then expand to be used by a large number of departments. Each department has a large number of applications varying in size. You need to design the VPC network architecture. Your solution must minimize the amount of management required while remaining flexible enough for development teams to quickly adapt to their evolving needs. What should you do?

You support a production service that runs on a single Compute Engine instance. You regularly need to spend time on recreating the service by deleting the crashing instance and creating a new instance based on the relevant image. You want to reduce the time spent performing manual operations while following Site Reliability Engineering principles. What should you do?

You need to run a business-critical workload on a fixed set of Compute Engine instances for several months. The workload is stable with the exact amount of resources allocated to it. You want to lower the costs for this workload without any performance implications. What should you do?

Your company's security team needs to have read-only access to Data Access audit logs in the _Required bucket. You want to provide your security team with the necessary permissions following the principle of least privilege and Google-recommended practices. What should you do?

Your organization wants to increase the availability target of an application from 99.9% to 99.99% for an investment of $2,000. The application's current revenue is $1,000,000. You need to determine whether the increase in availability is worth the investment for a single year of usage. What should you do?
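A common way to reason about this kind of trade-off is to estimate the revenue protected by the availability improvement and compare it with the cost. The sketch below takes the figures in the question as 99.9%, 99.99%, a $2,000 investment, and $1,000,000 in yearly revenue, and it assumes that revenue is lost in direct proportion to unavailability; that proportionality is a simplifying assumption, and the function name is hypothetical.

```python
from decimal import Decimal


def availability_gain_value(current_pct: str, target_pct: str, revenue: str) -> Decimal:
    """Revenue attributable to the availability improvement.

    Assumption: revenue is lost in direct proportion to unavailability.
    Decimal is used so that 99.99 - 99.9 is exactly 0.09.
    """
    improvement = (Decimal(target_pct) - Decimal(current_pct)) / Decimal(100)
    return improvement * Decimal(revenue)


value = availability_gain_value("99.9", "99.99", "1000000")
cost = Decimal("2000")

print(f"value of improved availability: ${value:,.2f}")
print(f"cost of improvement: ${cost:,.2f}")
print("improvement pays for itself within the year:", value > cost)
```

With these inputs the improvement is 0.09 percentage points, which corresponds to $900 of revenue, so under this simple model the gain is smaller than the $2,000 investment.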

You are on-call for an infrastructure service that has a large number of dependent systems. You receive an alert indicating that the service is failing to serve most of its requests and all of its dependent systems with hundreds of thousands of users are affected. As part of your Site Reliability Engineering (SRE) incident management protocol, you declare yourself Incident Commander (IC) and pull in two experienced people from your team as Operations Lead (OL) and Communications Lead (CL). What should you do next?

You recently configured an App Hub application. You are able to see the managed instance group, backend service, and URL map listed in App Hub, but you do not see the forwarding rule. You must ensure that the forwarding rule is listed. What should you do?

You are responsible for creating and modifying the Terraform templates that define your infrastructure. Because two new engineers will also be working on the same code, you need to define a process and adopt a tool that will prevent you from overwriting each other's code. You also want to ensure that you capture all updates in the latest version. What should you do?

Your company is migrating its production systems to Google Cloud. You need to implement site reliability engineering (SRE) practices during the migration to minimize customer impact from potential future incidents. Which two SRE practices should you implement?

Choose 2 answers

Your team deploys applications to three Google Kubernetes Engine (GKE) environments: development, staging, and production. You use GitHub repositories as your source of truth. You need to ensure that the three environments are consistent. You want to follow Google-recommended practices to enforce and install network policies and a logging DaemonSet on all the GKE clusters in those environments. What should you do?

Some of your production services are running in Google Kubernetes Engine (GKE) in the eu-west-1 region. Your build system runs in the us-west-1 region. You want to push the container images from your build system to a scalable registry to maximize the bandwidth for transferring the images to the cluster. What should you do?

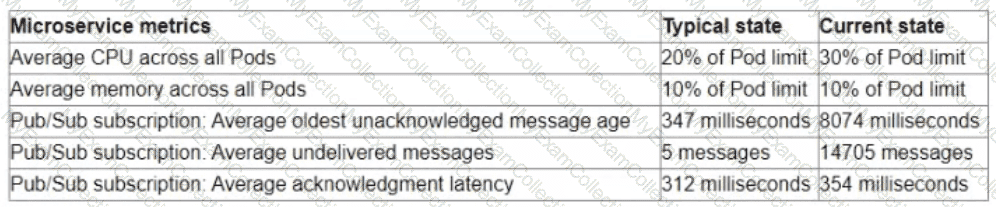

You are the on-call Site Reliability Engineer for a microservice that is deployed to a Google Kubernetes Engine (GKE) Autopilot cluster. Your company runs an online store that publishes order messages to Pub/Sub, and a microservice receives these messages and updates stock information in the warehousing system. A sales event caused an increase in orders, and the stock information is not being updated quickly enough. This is causing a large number of orders to be accepted for products that are out of stock. You check the metrics for the microservice and compare them to typical levels.

You need to ensure that the warehouse system accurately reflects product inventory at the time orders are placed and minimize the impact on customers. What should you do?

You have deployed a fleet of Compute Engine instances in Google Cloud. You need to ensure that monitoring metrics and logs for the instances are visible in Cloud Logging and Cloud Monitoring by your company's operations and cyber security teams. You need to grant the required roles for the Compute Engine service account by using Identity and Access Management (IAM) while following the principle of least privilege. What should you do?

You are writing a postmortem for an incident that severely affected users. You want to prevent similar incidents in the future. Which two of the following sections should you include in the postmortem? (Choose two.)

You are the Operations Lead for an ongoing incident with one of your services. The service usually runs at around 70% capacity. You notice that one node is returning 5xx errors for all requests. There has also been a noticeable increase in support cases from customers. You need to remove the offending node from the load balancer pool so that you can isolate and investigate the node. You want to follow Google-recommended practices to manage the incident and reduce the impact on users. What should you do?

Your organization wants to collect system logs that will be used to generate dashboards in Cloud Operations for their Google Cloud project. You need to configure all current and future Compute Engine instances to collect the system logs and you must ensure that the Ops Agent remains up to date. What should you do?

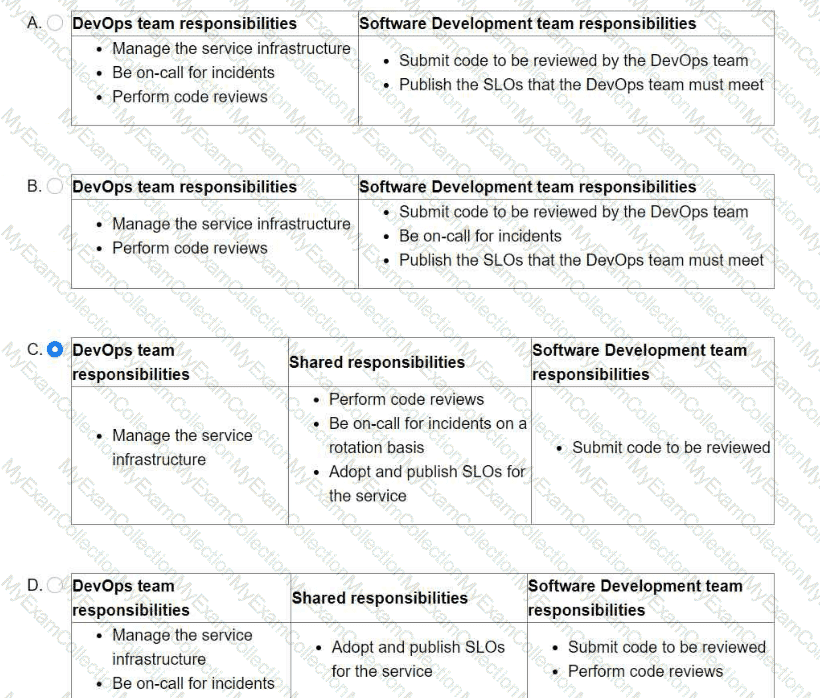

You are leading a DevOps project for your organization. The DevOps team is responsible for managing the service infrastructure and being on-call for incidents. The Software Development team is responsible for writing, submitting, and reviewing code. Neither team has any published SLOs. You want to design a new joint-ownership model for a service between the DevOps team and the Software Development team. Which responsibilities should be assigned to each team in the new joint-ownership model?

You have a pool of application servers running on Compute Engine. You need to provide a secure solution that requires the least amount of configuration and allows developers to easily access application logs for troubleshooting. How would you implement the solution on GCP?

You use Spinnaker to deploy your application and have created a canary deployment stage in the pipeline. Your application has an in-memory cache that loads objects at start time. You want to automate the comparison of the canary version against the production version. How should you configure the canary analysis?

Your application’s performance in Google Cloud has degraded since the last release. You suspect that downstream dependencies might be causing some requests to take longer to complete. You need to investigate the issue with your application to determine the cause. What should you do?

You are developing a strategy for monitoring your Google Cloud Platform (GCP) projects in production using Stackdriver Workspaces. One of the requirements is to be able to quickly identify and react to production environment issues without false alerts from development and staging projects. You want to ensure that you adhere to the principle of least privilege when providing relevant team members with access to Stackdriver Workspaces. What should you do?

Your company runs applications in Google Kubernetes Engine (GKE). Several applications rely on ephemeral volumes. You noticed some applications were unstable due to the DiskPressure node condition on the worker nodes. You need to identify which Pods are causing the issue, but you do not have execute access to workloads and nodes. What should you do?

You are using Stackdriver to monitor applications hosted on Google Cloud Platform (GCP). You recently deployed a new application, but its logs are not appearing on the Stackdriver dashboard.

You need to troubleshoot the issue. What should you do?

You are responsible for the reliability of a custom-built, distributed file storage service that your company uses internally. This service handles thousands of file uploads and downloads daily. You need to define a service level indicator (SLI) to measure the reliability of your service usage and configure alerts to be notified of potential issues. Which SLI should you use to measure the reliability of the service?

The new version of your containerized application has been tested and is ready to be deployed to production on Google Kubernetes Engine (GKE). You could not fully load-test the new version in your pre-production environment, and you need to ensure that the application does not have performance problems after deployment. Your deployment must be automated. What should you do?

You are configuring your CI/CD pipeline natively on Google Cloud. You want builds in a pre-production Google Kubernetes Engine (GKE) environment to be automatically load-tested before being promoted to the production GKE environment. You need to ensure that only builds that have passed this test are deployed to production. You want to follow Google-recommended practices. How should you configure this pipeline with Binary Authorization?

You support a user-facing web application. When analyzing the application's error budget over the previous six months, you notice that the application never consumed more than 5% of its error budget. You hold an SLO review with business stakeholders and confirm that the SLO is set appropriately. You want your application's reliability to more closely reflect its SLO. What steps can you take to further that goal while balancing velocity, reliability, and business needs?

Choose 2 answers

Your company runs services by using Google Kubernetes Engine (GKE). The GKE clusters in the development environment run applications with verbose logging enabled. Developers view logs by using the kubectl logs command and do not use Cloud Logging. Applications do not have a uniform logging structure defined. You need to minimize the costs associated with application logging while still collecting GKE operational logs. What should you do?