You must provide tunneling in the overlay that supports multipath capabilities.

Which two protocols provide this function? (Choose two.)

MPLSoGRE

VXLAN

VPN

MPLSoUDP

Answer: B, D

Explanation:

In cloud networking, overlay networks are used to create virtualized networks that abstract the underlying physical infrastructure. To support multipath capabilities, a tunneling protocol must expose enough per-flow variation in its outer headers for the underlay's Equal-Cost Multi-Path (ECMP) hashing to spread traffic across parallel paths. Let’s analyze each option:

A. MPLSoGRE

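Incorrect: MPLS over GRE (MPLSoGRE) is a tunneling protocol that encapsulates MPLS packets within GRE tunnels. Because the GRE outer header has no port fields, routers that hash on the outer headers see all traffic between two tunnel endpoints as a single flow, so MPLSoGRE does not inherently provide multipath load balancing.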

B. VXLAN

Correct: VXLAN (Virtual Extensible LAN) is an overlay protocol that encapsulates Layer 2 Ethernet frames within UDP packets. The outer UDP source port is typically derived from a hash of the inner frame's headers (as RFC 7348 recommends), so different flows are spread across paths by Equal-Cost Multi-Path (ECMP) routing in the underlay network. VXLAN is widely used in cloud environments for extending Layer 2 networks across data centers.

C. VPN

Incorrect: Virtual Private Networks (VPNs) are used to securely connect remote networks or users over public networks. They do not inherently provide multipath capabilities or overlay tunneling for virtual networks.

D. MPLSoUDP

Correct: MPLS over UDP (MPLSoUDP, RFC 7510) is a tunneling protocol that encapsulates MPLS packets within UDP packets. Like VXLAN, it varies the outer UDP source port per flow, so ECMP in the underlay network can distribute tunneled traffic across multiple paths. MPLSoUDP is often used in service provider environments for scalable and flexible network architectures.
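To illustrate why a varying UDP source port matters, here is a minimal Python sketch under two assumptions: the tunnel endpoint derives the outer source port from a hash of the inner flow (the behavior RFC 7348 recommends for VXLAN and RFC 7510 for MPLSoUDP), and a hypothetical underlay router picks one of N equal-cost paths by hashing the outer headers. CRC32, the function names, and the addresses are illustrative choices; real routers use vendor-specific hash functions and field sets.

```python
import zlib

# IANA-assigned outer UDP destination ports.
VXLAN_PORT = 4789      # RFC 7348
MPLS_UDP_PORT = 6635   # RFC 7510

def entropy_source_port(inner_flow: tuple) -> int:
    """Derive the outer UDP source port from the inner flow's headers.

    Packets of one inner flow always get the same port (no reordering), while
    different flows usually get different ports. The result stays in the
    dynamic port range 49152-65535.
    """
    # zlib.crc32 is deterministic across runs, unlike Python's built-in hash().
    digest = zlib.crc32(repr(inner_flow).encode())
    return 49152 + digest % 16384

def ecmp_path(outer_5tuple: tuple, num_paths: int) -> int:
    """Hypothetical underlay router: pick an equal-cost path by hashing outer headers."""
    return zlib.crc32(repr(outer_5tuple).encode()) % num_paths

def paths_used(inner_flows, dst_port: int, num_paths: int, use_entropy: bool) -> set:
    """Return the set of underlay path indexes used by a batch of tunneled flows."""
    vtep_a, vtep_b = "192.0.2.1", "192.0.2.2"   # tunnel endpoints (documentation IPs)
    used = set()
    for flow in inner_flows:
        # Without per-flow entropy every packet carries identical outer headers,
        # which is how a port-less GRE tunnel looks to the underlay.
        sport = entropy_source_port(flow) if use_entropy else 0
        outer = (vtep_a, vtep_b, "udp", sport, dst_port)
        used.add(ecmp_path(outer, num_paths))
    return used

# 64 TCP flows between the same two VMs, tunneled over 4 equal-cost underlay paths.
flows = [("10.0.0.1", "10.0.1.1", 6, 40000 + i, 443) for i in range(64)]
print("with entropy:   ", sorted(paths_used(flows, MPLS_UDP_PORT, 4, True)))
print("without entropy:", sorted(paths_used(flows, MPLS_UDP_PORT, 4, False)))
```

Without entropy the whole tunnel collapses onto one path; with it, the flows spread over several paths while each individual flow stays on a single path.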

Why These Protocols?

VXLAN: Provides Layer 2 extension and supports multipath forwarding, making it ideal for large-scale cloud deployments.

MPLSoUDP: Combines the benefits of MPLS with UDP encapsulation, enabling efficient multipath routing in overlay networks.

JNCIA Cloud References:

The JNCIA-Cloud certification covers overlay networking protocols like VXLAN and MPLSoUDP as part of its curriculum on cloud architectures. Understanding these protocols is essential for designing scalable and resilient virtual networks.

For example, Juniper Contrail uses VXLAN to extend virtual networks across distributed environments, ensuring seamless communication and high availability.

Which two statements are correct about Network Functions Virtualization (NFV)? (Choose two.)

The NFV framework explains how VNFs fit into the whole solution.

The NFV Infrastructure (NFVI) is a component of NFV.

The NFV Infrastructure (NFVI) is not a component of NFV.

The NFV framework is defined by the W3C.

Answer: A, B

Explanation:

Network Functions Virtualization (NFV) is a framework designed to virtualize network services traditionally run on proprietary hardware. It decouples network functions from dedicated hardware appliances and implements them as software running on standard servers or virtual machines. Let’s analyze each statement:

A. The NFV framework explains how VNFs fit into the whole solution.

Correct: The NFV framework provides a structured approach to deploying and managing Virtualized Network Functions (VNFs). It defines how VNFs interact with other components, such as the NFV Infrastructure (NFVI), Management and Orchestration (MANO), and the underlying hardware.

B. The NFV Infrastructure (NFVI) is a component of NFV.

Correct: The NFV Infrastructure (NFVI) is a critical part of the NFV architecture. It includes the physical and virtual resources (e.g., compute, storage, networking) that host and support VNFs. NFVI acts as the foundation for deploying and running virtualized network functions.

C. The NFV Infrastructure (NFVI) is not a component of NFV.

Incorrect: This statement contradicts the NFV architecture. NFVI is indeed a core component of NFV, providing the necessary infrastructure for VNFs.

D. The NFV framework is defined by the W3C.

Incorrect: The NFV framework is defined by the European Telecommunications Standards Institute (ETSI), not the W3C. ETSI’s NFV Industry Specification Group (ISG) established the standards and architecture for NFV.

Why These Answers?

Framework Explanation: The NFV framework provides a comprehensive view of how VNFs integrate into the overall solution, ensuring scalability and flexibility.

NFVI Role: NFVI is essential for hosting and supporting VNFs, making it a fundamental part of the NFV architecture.

JNCIA Cloud References:

The JNCIA-Cloud certification covers NFV as part of its cloud infrastructure curriculum. Understanding the NFV framework and its components is crucial for deploying and managing virtualized network functions in cloud environments.

For example, Juniper Contrail integrates with NFV frameworks to deploy and manage VNFs, enabling service providers to deliver network services efficiently and cost-effectively.

Your e-commerce application is deployed on a public cloud. As compared to the rest of the year, it receives substantial traffic during the Christmas season.

In this scenario, which cloud computing feature automatically increases or decreases the resource based on the demand?

resource pooling

on-demand self-service

rapid elasticity

broad network access

Answer: C

Explanation:

Cloud computing provides several key characteristics that enable flexible and scalable resource management. Let’s analyze each option:

A. resource pooling

Incorrect: Resource pooling refers to the sharing of computing resources (e.g., storage, processing power) among multiple users or tenants. While important, it does not directly address the automatic scaling of resources based on demand.

B. on-demand self-service

Incorrect: On-demand self-service allows users to provision resources (e.g., virtual machines, storage) without requiring human intervention. While this is a fundamental feature of cloud computing, it does not describe the ability to automatically scale resources.

C. rapid elasticity

Correct: Rapid elasticity is the ability of a cloud environment to dynamically increase or decrease resources based on demand. This ensures that applications can scale up during peak traffic periods (e.g., Christmas season) and scale down during low-demand periods, optimizing cost and performance.

D. broad network access

Incorrect: Broad network access refers to the ability to access cloud services over the internet from various devices. While essential for accessibility, it does not describe the scaling of resources.

Why Rapid Elasticity?

Dynamic Scaling: Rapid elasticity ensures that resources are provisioned or de-provisioned automatically to meet changing workload demands.

Cost Efficiency: By scaling resources only when needed, organizations can optimize costs while maintaining performance.
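Cloud autoscalers commonly implement this dynamic scaling with a target-tracking rule. The Python sketch below uses the proportional formula of the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current × observed / target), clamped to minimum and maximum instance counts. The function name, the limits, and the utilization figures are illustrative assumptions, and the HPA's tolerance band and stabilization windows are omitted.

```python
def desired_instances(current: int, observed_pct: int, target_pct: int,
                      min_instances: int = 2, max_instances: int = 20) -> int:
    """Target-tracking scale decision: ceil(current * observed / target), clamped.

    observed_pct is the fleet's average utilization (e.g. CPU %) and
    target_pct is the utilization the operator wants to maintain.
    """
    if current <= 0 or target_pct <= 0:
        raise ValueError("current and target_pct must be positive")
    # Integer ceiling division avoids floating-point rounding surprises.
    raw = (current * observed_pct + target_pct - 1) // target_pct
    # Clamp: a traffic spike cannot exceed the budget, and a quiet period
    # cannot remove all capacity.
    return max(min_instances, min(max_instances, raw))

# Christmas peak: 4 instances at 90% CPU against a 60% target -> scale out.
print(desired_instances(4, 90, 60))   # 6
# January lull: 6 instances at 10% CPU -> raw value 1, clamped to the minimum of 2.
print(desired_instances(6, 10, 60))   # 2
```

The clamp is the part that keeps elasticity cost-efficient: scaling follows demand, but only within bounds the operator has approved.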

JNCIA Cloud References:

The JNCIA-Cloud certification emphasizes the key characteristics of cloud computing, including rapid elasticity. Understanding this concept is essential for designing scalable and cost-effective cloud architectures.

For example, Juniper Contrail supports cloud elasticity by enabling dynamic provisioning of network resources in response to changing demands.

Which OpenStack service displays server details of the compute node?

Keystone

Neutron

Cinder

Nova

Answer: D

Explanation:

OpenStack provides various services to manage cloud infrastructure resources, including compute nodes and virtual machines (VMs). Let’s analyze each option:

A. Keystone

Incorrect: Keystone is the OpenStack identity service responsible for authentication and authorization. It does not display server details of compute nodes.

B. Neutron

Incorrect: Neutron is the OpenStack networking service that manages virtual networks, routers, and IP addresses. It is unrelated to displaying server details of compute nodes.

C. Cinder

Incorrect: Cinder is the OpenStack block storage service that provides persistent storage volumes for VMs. It does not display server details of compute nodes.

D. Nova

Correct: Nova is the OpenStack compute service responsible for managing the lifecycle of virtual machines, including provisioning, scheduling, and monitoring. It also provides detailed information about compute nodes and VMs, such as CPU, memory, and disk usage.

Why Nova?

Compute Node Management: Nova manages compute nodes and provides APIs to retrieve server details, including resource utilization and VM status.

Integration with CLI/REST APIs: Commands such as openstack server show (instance details) and openstack hypervisor show, or the legacy nova hypervisor-show (compute node details), display this information.

JNCIA Cloud References:

The JNCIA-Cloud certification covers OpenStack services, including Nova, as part of its cloud infrastructure curriculum. Understanding Nova’s role in managing compute resources is essential for operating OpenStack environments.

For example, Juniper Contrail integrates with OpenStack Nova to provide advanced networking and security features for compute nodes and VMs.

Which command would you use to see which VMs are running on your KVM device?

virt-install

virsh net-list

virsh list

VBoxManage list runningvms

Answer: C

Explanation:

KVM (Kernel-based Virtual Machine) is a popular open-source virtualization technology that allows you to run virtual machines (VMs) on Linux systems. The virsh command-line tool is used to manage KVM VMs. Let’s analyze each option:

A. virt-install

Incorrect: The virt-install command is used to create and provision new virtual machines. It is not used to list running VMs.

B. virsh net-list

Incorrect: The virsh net-list command lists virtual networks configured in the KVM environment. It does not display information about running VMs.

C. virsh list

Correct: The virsh list command displays the status of virtual machines managed by the KVM hypervisor. By default, it shows only running VMs. You can use the --all flag to include stopped VMs in the output.

D. VBoxManage list runningvms

Incorrect: The VBoxManage command is used with Oracle VirtualBox, not KVM. It is unrelated to KVM virtualization.

Why virsh list?

Purpose-Built for KVM: virsh is the standard tool for managing KVM virtual machines, and virsh list is specifically designed to show the status of running VMs.

Simplicity: The command is straightforward and provides the required information without additional complexity.
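When the same check has to be scripted, the table printed by virsh list can be parsed. The Python sketch below assumes the default layout of virsh list --all (an Id column showing - for inactive domains, a Name column, and a State column that may contain spaces, such as "shut off") and domain names without whitespace; the domain names in the sample are made up. For scripts that only need names, virsh list --name avoids parsing altogether.

```python
def parse_virsh_list(output: str) -> list[dict]:
    """Parse the table printed by `virsh list` or `virsh list --all`."""
    domains = []
    for raw_line in output.splitlines():
        line = raw_line.strip()
        # Skip blank lines, the header row, and the dashed separator row.
        if not line or line.startswith("Id") or set(line) == {"-"}:
            continue
        # maxsplit=2 keeps multi-word states such as "shut off" intact.
        dom_id, name, state = line.split(None, 2)
        domains.append({
            "id": None if dom_id == "-" else int(dom_id),  # inactive domains have no ID
            "name": name,
            "state": state,
        })
    return domains

def running_vms(output: str) -> list[str]:
    """Names of domains whose state is 'running'."""
    return [d["name"] for d in parse_virsh_list(output) if d["state"] == "running"]

SAMPLE = """\
 Id   Name       State
---------------------------
 1    web01      running
 2    db01       paused
 -    backup01   shut off
"""
print(running_vms(SAMPLE))   # ['web01']
```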

JNCIA Cloud References:

The JNCIA-Cloud certification emphasizes understanding virtualization technologies, including KVM. Managing virtual machines using tools like virsh is a fundamental skill for operating virtualized environments.

For example, Juniper Contrail supports integration with KVM hypervisors, enabling the deployment and management of virtualized network functions (VNFs). Proficiency with KVM tools ensures efficient management of virtualized infrastructure.

Which two CPU flags indicate virtualization? (Choose two.)

lvm

vmx

xvm

kvm

Answer: B, D

Explanation:

CPU flags indicate hardware support for specific features, including virtualization. Let’s analyze each option:

A. lvm

Incorrect: LVM (Logical Volume Manager) is a storage management technology used in Linux systems. It is unrelated to CPU virtualization.

B. vmx

Correct: The vmx flag indicates Intel Virtualization Technology (VT-x), which provides hardware-assisted virtualization capabilities. This feature is essential for running hypervisors like VMware ESXi, KVM, and Hyper-V.

C. xvm

Incorrect: xvm is not a recognized CPU flag for virtualization. It may be a misinterpretation or typo.

D. kvm

Correct: kvm refers to Kernel-based Virtual Machine (KVM), the Linux kernel module that uses hardware virtualization extensions (Intel VT-x or AMD-V) to run virtual machines. Strictly speaking, kvm is not itself a flag listed in /proc/cpuinfo; it depends on the underlying CPU flags, vmx (Intel) or svm (AMD), being present.
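On a Linux host this is usually checked by looking for vmx or svm in /proc/cpuinfo (the shell idiom is grep -E 'vmx|svm' /proc/cpuinfo) and by checking that /dev/kvm exists, which indicates the kvm module is loaded. The Python sketch below parses cpuinfo text passed in as a string so it can be tried on any machine; the function name and sample text are illustrative, and it only covers the x86 "flags" line.

```python
from pathlib import Path

# x86 hardware virtualization flags as reported in /proc/cpuinfo.
HW_VIRT_FLAGS = {"vmx": "Intel VT-x", "svm": "AMD-V"}

def virtualization_flags(cpuinfo_text: str) -> set[str]:
    """Return the hardware virtualization flags present in /proc/cpuinfo text."""
    found = set()
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            found |= HW_VIRT_FLAGS.keys() & set(value.split())
    return found

if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():   # Linux only
        flags = virtualization_flags(cpuinfo.read_text())
        names = ", ".join(HW_VIRT_FLAGS[f] for f in sorted(flags))
        print("hardware virtualization:", names or "none")
        # /dev/kvm appears once the kvm module (with kvm_intel or kvm_amd) is loaded.
        print("kvm device present:", Path("/dev/kvm").exists())
```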

Why These Answers?

Hardware Virtualization Support: vmx is Intel's VT-x CPU flag, and kvm is the Linux hypervisor that depends on such flags (vmx on Intel, svm on AMD). Together they indicate a host that can run virtual machines efficiently by offloading privileged operations to the CPU.

JNCIA Cloud References:

The JNCIA-Cloud certification emphasizes understanding virtualization technologies, including hardware-assisted virtualization. Recognizing the vmx and svm CPU flags, and knowing that KVM depends on them, is crucial for deploying and troubleshooting virtualized environments.

For example, Juniper Contrail integrates with hypervisors like KVM to manage virtualized workloads in cloud environments. Ensuring hardware virtualization support is a prerequisite for deploying such solutions.

What is the role of overlay tunnels in an overlay software-defined networking (SDN) solution?

The overlay tunnels provide optimization of traffic for performance and resilience.

The overlay tunnels provide load balancing and scale out for applications.

The overlay tunnels provide microsegmentation for workloads.

The overlay tunnels abstract the underlay network topology.

Answer: D

Explanation:

In an overlay software-defined networking (SDN) solution, overlay tunnels play a critical role in abstracting the underlying physical network (underlay) from the virtualized network (overlay). Let’s analyze each option:

A. The overlay tunnels provide optimization of traffic for performance and resilience.

Incorrect: While overlay tunnels can contribute to traffic optimization indirectly, their primary role is not performance or resilience. These aspects are typically handled by SDN controllers or other network optimization tools.

B. The overlay tunnels provide load balancing and scale out for applications.

Incorrect: Load balancing and scaling are functions of application-level services or SDN controllers, not the overlay tunnels themselves. Overlay tunnels focus on encapsulating traffic rather than managing application workloads.

C. The overlay tunnels provide microsegmentation for workloads.

Incorrect: Microsegmentation is achieved through policies and security rules applied at the overlay network level, not directly by the tunnels themselves. Overlay tunnels enable the transport of segmented traffic but do not enforce segmentation.

D. The overlay tunnels abstract the underlay network topology.

Correct: Overlay tunnels encapsulate traffic between endpoints (e.g., VMs, containers) and hide the complexity of the underlay network. This abstraction allows the overlay network to operate independently of the physical network topology, enabling flexibility and scalability.
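As a concrete example of that encapsulation, the Python sketch below builds the 8-byte VXLAN header defined in RFC 7348 (an 8-bit flags field with the I bit set, reserved bits, and a 24-bit VXLAN Network Identifier) and prepends it to an inner Ethernet frame. A real VTEP would also add outer UDP (destination port 4789), IP, and Ethernet headers, which are omitted here; the underlay forwards on those outer headers only. The dummy frame and the VNI value are illustrative.

```python
import struct

VXLAN_FLAG_VALID_VNI = 0x08  # the "I" flag: the VNI field is valid

def vxlan_encapsulate(inner_frame: bytes, vni: int) -> bytes:
    """Prepend an RFC 7348 VXLAN header to an inner Ethernet frame."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: 8-bit flags followed by 24 reserved bits.
    # Word 2: 24-bit VNI followed by 8 reserved bits.
    header = struct.pack("!II", VXLAN_FLAG_VALID_VNI << 24, vni << 8)
    return header + inner_frame

def vxlan_decapsulate(packet: bytes) -> tuple[int, bytes]:
    """Return (vni, inner_frame) from a VXLAN payload."""
    word1, word2 = struct.unpack("!II", packet[:8])
    if not (word1 >> 24) & VXLAN_FLAG_VALID_VNI:
        raise ValueError("I flag not set")
    return word2 >> 8, packet[8:]

frame = bytes(14) + b"payload"   # dummy inner frame: zeroed Ethernet header plus data
pkt = vxlan_encapsulate(frame, vni=5001)
print(pkt[:8].hex())             # 0800000000138900
```

The VNI is what keeps tenants' virtual networks separate on a shared underlay; the underlay itself never looks past the outer headers.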

Why This Answer?

Abstraction of Underlay: Overlay tunnels use encapsulation protocols such as VXLAN, GRE (including MPLSoGRE), or MPLSoUDP to create virtualized networks that are decoupled from the physical infrastructure. This abstraction simplifies network management and enables advanced features like multi-tenancy and mobility.

JNCIA Cloud References:

The JNCIA-Cloud certification covers overlay and underlay networks as part of its SDN curriculum. Understanding the role of overlay tunnels is essential for designing and managing virtualized networks in cloud environments.

For example, Juniper Contrail uses overlay tunnels to provide connectivity between virtual machines (VMs) and containers, abstracting the physical network and enabling seamless communication across distributed environments.

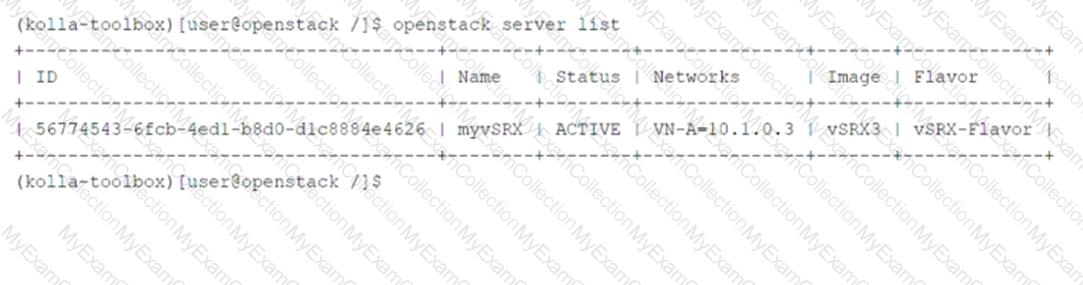

Click the Exhibit button.

Referring to the exhibit, which two statements are correct? (Choose two.)

The myvSRX instance is using a default image.

The myvSRX instance is a part of a default network.

The myvSRX instance is created using a custom flavor.

The myvSRX instance is currently running.

Answer: C, D

Explanation:

The openstack server list command provides information about virtual machine (VM) instances in the OpenStack environment. Let’s analyze the exhibit and each statement:

Key Information from the Exhibit:

The output shows details about the myvSRX instance:

Status: ACTIVE (indicating the instance is running).

Networks: VN-A-10.1.0.3 (indicating the instance is part of a specific network).

Image: vSRX3 (indicating the instance was created using a custom image).

Flavor: vSRX-Flavor (indicating the instance was created using a custom flavor).

Option Analysis:

A. The myvSRX instance is using a default image.

Incorrect: The image name vSRX3 suggests that this is a custom image, not the default image provided by OpenStack.

B. The myvSRX instance is a part of a default network.

Incorrect: The network name VN-A-10.1.0.3 indicates that the instance is part of a specific network, not the default network.

C. The myvSRX instance is created using a custom flavor.

Correct: The flavor name vSRX-Flavor indicates that the instance was created using a custom flavor, which defines the CPU, RAM, and disk space properties.

D. The myvSRX instance is currently running.

Correct: The ACTIVE status confirms that the instance is currently running.

Why These Statements?

Custom Flavor: The vSRX-Flavor name clearly indicates that a custom flavor was used to define the instance's resource allocation.

Running Instance: The ACTIVE status confirms that the instance is operational and available for use.

JNCIA Cloud References:

The JNCIA-Cloud certification emphasizes understanding OpenStack commands and outputs, including the openstack server list command. Recognizing how images, flavors, and statuses are represented is essential for managing VM instances effectively.

For example, Juniper Contrail integrates with OpenStack Nova to provide advanced networking features for VMs, ensuring seamless operation based on their configurations.

Which container runtime engine is used by default in OpenShift?

containerd

cri-o

Docker

runC

Answer: B

Explanation:

OpenShift uses a container runtime engine to manage and run containers within its Kubernetes-based environment. Let’s analyze each option:

A. containerd

Incorrect:

While containerd is a popular container runtime used in Kubernetes environments, it is not the default runtime for OpenShift. OpenShift uses a runtime specifically optimized for Kubernetes workloads.

B. cri-o

Correct:

CRI-O is the default container runtime engine for OpenShift. It is a lightweight, Kubernetes-native runtime that implements the Container Runtime Interface (CRI) and is optimized for running containers in Kubernetes environments.

C. Docker

Incorrect:

Docker was historically used as a container runtime in earlier versions of Kubernetes and OpenShift. However, OpenShift has transitioned to CRI-O as its default runtime, as Docker's architecture is not directly aligned with Kubernetes' requirements.

D. runC

Incorrect:

runC is a low-level container runtime that executes containers. While it is used internally by higher-level runtimes like containerd and CRI-O, it is not used directly as the runtime engine in OpenShift.

Why CRI-O?

Kubernetes-Native Design: CRI-O is purpose-built for Kubernetes, ensuring compatibility and performance.

Lightweight and Secure: CRI-O provides a minimalistic runtime that focuses on running containers efficiently and securely.

JNCIA Cloud References:

The JNCIA-Cloud certification covers container runtimes as part of its curriculum on container orchestration platforms. Understanding the role of CRI-O in OpenShift is essential for managing containerized workloads effectively.

For example, Juniper Contrail integrates with OpenShift to provide advanced networking features, leveraging CRI-O for container execution.

What are two available installation methods for an OpenShift cluster? (Choose two.)

installer-provisioned infrastructure

kubeadm

user-provisioned infrastructure

kubespray

Answer: A, C

Explanation:

OpenShift provides multiple methods for installing and deploying clusters, depending on the level of control and automation desired. Let’s analyze each option:

A. installer-provisioned infrastructure

Correct:

Installer-provisioned infrastructure (IPI) is an automated installation method where the OpenShift installer provisions and configures the underlying infrastructure (e.g., virtual machines, networking) using cloud provider APIs or bare-metal platforms. This method simplifies deployment by handling most of the setup automatically.

B. kubeadm

Incorrect:

kubeadm is a tool used to bootstrap Kubernetes clusters manually. While it is widely used for Kubernetes installations, it is not specific to OpenShift and is not an official installation method for OpenShift clusters.

C. user-provisioned infrastructure

Correct:

User-provisioned infrastructure (UPI) is a manual installation method where users prepare and configure the infrastructure (e.g., virtual machines, load balancers, DNS) before deploying OpenShift. This method provides greater flexibility and control over the environment but requires more effort from the user.

D. kubespray

Incorrect:

Kubespray is an open-source tool used to deploy Kubernetes clusters on various infrastructures. Like kubeadm, it is not specific to OpenShift and is not an official installation method for OpenShift clusters.

Why These Methods?

Installer-Provisioned Infrastructure (IPI): Automates the entire installation process, making it ideal for users who want a quick and hassle-free deployment.

User-Provisioned Infrastructure (UPI): Allows advanced users to customize the infrastructure and tailor the deployment to their specific needs.

JNCIA Cloud References:

The JNCIA-Cloud certification covers OpenShift installation methods as part of its curriculum on container orchestration platforms. Understanding the differences between IPI and UPI is essential for deploying OpenShift clusters effectively.

For example, Juniper Contrail integrates with OpenShift to provide advanced networking features, regardless of whether the cluster is deployed using IPI or UPI.

You are asked to provision a bare-metal server using OpenStack.

Which service is required to satisfy this requirement?

Ironic

Zun

Trove

Magnum

Answer: A

Explanation:

OpenStack is an open-source cloud computing platform that provides various services for managing compute, storage, and networking resources. To provision a bare-metal server in OpenStack, the Ironic service is required. Let’s analyze each option:

A. Ironic

Correct: OpenStack Ironic is a bare-metal provisioning service that allows you to manage and provision physical servers as if they were virtual machines. It automates tasks such as hardware discovery, configuration, and deployment of operating systems on bare-metal servers.

B. Zun

Incorrect: OpenStack Zun is a container service that manages the lifecycle of containers. It is unrelated to bare-metal provisioning.

C. Trove

Incorrect: OpenStack Trove is a Database as a Service (DBaaS) solution that provides managed database instances. It does not handle bare-metal provisioning.

D. Magnum

Incorrect: OpenStack Magnum is a container orchestration service that supports Kubernetes, Docker Swarm, and other container orchestration engines. It is focused on containerized workloads, not bare-metal servers.

Why Ironic?

Purpose-Built for Bare-Metal: Ironic is specifically designed to provision and manage bare-metal servers, making it the correct choice for this requirement.

Automation: Ironic automates the entire bare-metal provisioning process, including hardware discovery, configuration, and OS deployment.
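Ironic tracks each physical node through a provisioning state machine, driven by commands such as openstack baremetal node manage, provide, deploy, and undeploy. The Python sketch below models only a simplified happy path through a subset of the documented states (enroll, manageable, available, active); real Ironic adds intermediate states such as verifying, cleaning, deploying, and wait call-back, plus failure states, so treat the transition table and the node name as illustrative assumptions.

```python
# Simplified subset of Ironic provision states: (current_state, verb) -> next_state.
TRANSITIONS = {
    ("enroll", "manage"): "manageable",      # management credentials verified
    ("manageable", "provide"): "available",  # node cleaned and ready for scheduling
    ("available", "deploy"): "active",       # OS image written and node booted
    ("active", "undeploy"): "available",     # instance torn down, node cleaned again
}

class BareMetalNode:
    """Toy model of a node moving through Ironic's provisioning states."""

    def __init__(self, name: str):
        self.name = name
        self.state = "enroll"   # newly registered nodes start here
        self.history = [self.state]

    def apply(self, verb: str) -> str:
        try:
            self.state = TRANSITIONS[(self.state, verb)]
        except KeyError:
            raise ValueError(f"cannot {verb!r} node {self.name} in state {self.state!r}") from None
        self.history.append(self.state)
        return self.state

node = BareMetalNode("rack1-server07")
for verb in ("manage", "provide", "deploy"):
    node.apply(verb)
print(" -> ".join(node.history))   # enroll -> manageable -> available -> active
```

Rejecting out-of-order verbs mirrors how Ironic refuses, for example, to deploy a node that has not yet been made available.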

JNCIA Cloud References:

The JNCIA-Cloud certification covers OpenStack as part of its cloud infrastructure curriculum. Understanding OpenStack services like Ironic is essential for managing bare-metal and virtualized environments in cloud deployments.

For example, Juniper Contrail integrates with OpenStack to provide networking and security for both virtualized and bare-metal workloads. Proficiency with OpenStack services ensures efficient management of diverse cloud resources.

Which OpenStack object enables multitenancy?

role

flavor

image

project

Answer: D

Explanation:

Multitenancy is a key feature of OpenStack, enabling multiple users or organizations to share cloud resources while maintaining isolation. Let’s analyze each option:

A. role

Incorrect: A role defines permissions and access levels for users within a project. While roles are important for managing user privileges, they do not directly enable multitenancy.

B. flavor

Incorrect: A flavor specifies the compute, memory, and storage capacity of a VM instance. It is unrelated to enabling multitenancy.

C. image

Incorrect: An image is a template used to create VM instances. While images are essential for deploying VMs, they do not enable multitenancy.

D. project

Correct: A project (also known as a tenant) is the primary mechanism for enabling multitenancy in OpenStack. Each project represents an isolated environment where resources (e.g., VMs, networks, storage) are provisioned and managed independently.
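The isolation a project provides can be pictured as a data model in which every resource belongs to exactly one project, every lookup is scoped to the caller's project, and quotas are counted per project. The Python below is a toy model of that idea only: the class, the project names, and the quota numbers are hypothetical and do not mirror any OpenStack API.

```python
from collections import defaultdict

class ToyCloud:
    """Toy model of project-scoped resources and quotas (not an OpenStack API)."""

    def __init__(self, instance_quota: dict[str, int]):
        self.instance_quota = instance_quota   # project -> maximum instances
        self.instances = defaultdict(list)     # project -> instance names

    def create_instance(self, project: str, name: str) -> None:
        # Quotas are checked against the project's own usage only.
        if len(self.instances[project]) >= self.instance_quota.get(project, 0):
            raise RuntimeError(f"quota exceeded for project {project}")
        self.instances[project].append(name)

    def list_instances(self, project: str) -> list[str]:
        # A caller only ever sees resources in its own project.
        return list(self.instances[project])

cloud = ToyCloud({"retail": 2, "finance": 1})
cloud.create_instance("retail", "web-1")
cloud.create_instance("finance", "ledger-1")
print(cloud.list_instances("retail"))    # ['web-1']
```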

Why Project?

Isolation: Projects ensure that resources allocated to one tenant are isolated from others, enabling secure and efficient resource sharing.

Resource Management: Each project has its own quotas, users, and resources, making it the foundation of multitenancy in OpenStack.

JNCIA Cloud References:

The JNCIA-Cloud certification emphasizes understanding OpenStack’s multitenancy model, including the role of projects. Recognizing how projects enable resource isolation is essential for managing shared cloud environments.

For example, Juniper Contrail integrates with OpenStack Keystone to enforce multitenancy and network segmentation for projects.

You want to limit the memory, CPU, and network utilization of a set of processes running on a Linux host.

Which Linux feature would you configure in this scenario?

virtual routing and forwarding instances

network namespaces

control groups

slicing

Answer: C

Explanation:

Linux provides several features to manage system resources and isolate processes. Let’s analyze each option:

A. virtual routing and forwarding instances

Incorrect: Virtual Routing and Forwarding (VRF) is a networking feature used to create multiple routing tables on a single router or host. It is unrelated to limiting memory, CPU, or network utilization for processes.

B. network namespaces

Incorrect: Network namespaces are used to isolate network resources (e.g., interfaces, routing tables) for processes. While they can help with network isolation, they do not directly limit memory or CPU usage.

C. control groups

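Correct: Control Groups (cgroups) are a Linux kernel feature that allows you to limit, account for, and isolate the resource usage (CPU, memory, disk I/O) of a set of processes. Network utilization is controlled indirectly: the cgroup v1 net_cls and net_prio controllers tag or prioritize a group's traffic so that tools such as tc can shape it. cgroups are commonly used in containerization technologies like Docker and Kubernetes to enforce resource limits.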

D. slicing

Incorrect: "Slicing" is not a Linux kernel feature for resource management. systemd does group processes into slice units, but a slice is only a node in the cgroup hierarchy, so the feature that actually enforces the limits is still control groups.

Why Control Groups?

Resource Management: cgroups provide fine-grained control over memory and CPU and, combined with traffic control, over network utilization, ensuring that processes do not exceed their allocated resources.

Containerization Foundation: cgroups are a core technology behind container runtimes like containerd and orchestration platforms like Kubernetes.
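On a host that uses cgroup v2 (the unified hierarchy, normally mounted at /sys/fs/cgroup), limits are set by writing to control files in a group's directory: memory.max takes a byte count, cpu.max takes a quota and a period in microseconds, and writing a PID to cgroup.procs moves that process into the group. The Python sketch below assumes that layout; the group name and values are hypothetical, the real hierarchy requires root and the memory and cpu controllers enabled in the parent's cgroup.subtree_control, and the demo runs against a scratch directory instead. cgroup v2 has no network-bandwidth controller, so network shaping would still go through tools such as tc.

```python
import tempfile
from pathlib import Path

CPU_PERIOD_US = 100_000  # 100 ms, the default period in cgroup v2's cpu.max

def cpu_max_value(cpus: float) -> str:
    """Convert a CPU share (0.5 = half of one CPU, 2 = two CPUs) to a cpu.max line."""
    return f"{int(cpus * CPU_PERIOD_US)} {CPU_PERIOD_US}"

def apply_limits(group_dir: Path, memory_bytes: int, cpus: float, pids: list[int]) -> None:
    """Create a cgroup v2 group, cap its memory and CPU, and move processes into it."""
    # On a real cgroup filesystem, mkdir makes the kernel create the control files.
    group_dir.mkdir(parents=True, exist_ok=True)
    (group_dir / "memory.max").write_text(f"{memory_bytes}\n")
    (group_dir / "cpu.max").write_text(cpu_max_value(cpus) + "\n")
    for pid in pids:
        # The kernel expects one PID per write to cgroup.procs.
        (group_dir / "cgroup.procs").write_text(f"{pid}\n")

if __name__ == "__main__":
    # Dry run against a scratch directory. On a real host (as root) the
    # hypothetical group would be Path("/sys/fs/cgroup/demo") instead.
    with tempfile.TemporaryDirectory() as scratch:
        demo = Path(scratch) / "demo"
        apply_limits(demo, 512 * 1024**2, 0.5, [4242])   # 512 MiB, half a CPU
        print((demo / "cpu.max").read_text().strip())     # 50000 100000
```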

JNCIA Cloud References:

The JNCIA-Cloud certification covers Linux features like cgroups as part of its containerization curriculum. Understanding cgroups is essential for managing resource allocation in cloud environments.

For example, Juniper Contrail integrates with Kubernetes to manage containerized workloads, and Kubernetes in turn relies on cgroups (through the container runtime) to enforce pod resource limits.

What are two Kubernetes worker node components? (Choose two.)

kube-apiserver

kubelet

kube-scheduler

kube-proxy

Answer: B, D

Explanation:

Kubernetes worker nodes are responsible for running containerized applications and managing the workloads assigned to them. Each worker node contains several key components that enable it to function within a Kubernetes cluster. Let’s analyze each option:

A. kube-apiserver

Incorrect: The kube-apiserver is a control plane component, not a worker node component. It serves as the front-end for the Kubernetes API, handling communication between the control plane and worker nodes.

B. kubelet

Correct: The kubelet is a critical worker node component. It ensures that containers are running in the desired state by interacting with the container runtime (e.g., containerd). It communicates with the control plane to receive instructions and report the status of pods.

C. kube-scheduler

Incorrect: The kube-scheduler is a control plane component responsible for assigning pods to worker nodes based on resource availability and other constraints. It does not run on worker nodes.

D. kube-proxy

Correct: The kube-proxy is another essential worker node component. It manages network communication for services and pods by implementing load balancing and routing rules. It ensures that traffic is correctly forwarded to the appropriate pods.

Why These Components?

kubelet: Ensures that containers are running as expected and maintains the desired state of pods.

kube-proxy: Handles networking and enables communication between services and pods within the cluster.
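The kubelet behavior described above is at heart a reconciliation loop: compare the pods the control plane has bound to this node with what the container runtime reports, then start or stop containers to close the gap. The Python sketch below shows only that comparison against a fake runtime; the function and pod names are hypothetical, and the real kubelet also handles restarts, probes, volumes, and status reporting.

```python
def reconcile(desired: set[str], running: set[str]) -> tuple[set[str], set[str]]:
    """Return (to_start, to_stop) so that the running pods converge on the desired ones."""
    return desired - running, running - desired

def sync_once(desired: set[str], runtime: set[str]) -> None:
    """One pass of a toy kubelet sync loop against a fake runtime (a set of pod names)."""
    to_start, to_stop = reconcile(desired, runtime)
    runtime |= to_start   # stand-in for asking the container runtime to start pods
    runtime -= to_stop    # stand-in for stopping and removing pods

desired_pods = {"web-7f9c", "api-5d2b", "cache-9a1e"}   # bound to this node by the scheduler
runtime_pods = {"web-7f9c", "old-job-1c3f"}            # what the runtime reports
to_start, to_stop = reconcile(desired_pods, runtime_pods)
print(sorted(to_start), sorted(to_stop))   # ['api-5d2b', 'cache-9a1e'] ['old-job-1c3f']
sync_once(desired_pods, runtime_pods)
print(runtime_pods == desired_pods)        # True
```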

JNCIA Cloud References:

The JNCIA-Cloud certification covers Kubernetes architecture, including the roles of worker node components. Understanding the functions of kubelet and kube-proxy is crucial for managing Kubernetes clusters and troubleshooting issues.

For example, Juniper Contrail integrates with Kubernetes to provide advanced networking and security features. Proficiency with worker node components ensures efficient operation of containerized workloads.

Which two statements are correct about cloud computing? (Choose two.)

Cloud computing eliminates operating expenses.

Cloud computing has the ability to scale elastically.

Cloud computing increases the physical control of the data resources.

Cloud computing allows access to data any time from any location through the Internet.

Answer: B, D

Explanation:

Cloud computing is a model for delivering IT services where resources are provided over the internet on-demand. Let’s analyze each statement:

A. Cloud computing eliminates operating expenses.

Incorrect: While cloud computing can reduce certain IT costs (e.g., hardware procurement, maintenance), it does not eliminate operating expenses entirely. Organizations still incur costs such as subscription fees, data transfer charges, and operational management of cloud resources. Additionally, there may be costs associated with training staff or migrating workloads to the cloud.

B. Cloud computing has the ability to scale elastically.

Correct: Elasticity is one of the key characteristics of cloud computing. It allows resources (e.g., compute, storage, networking) to scale up or down automatically based on demand. For example, during peak usage, additional virtual machines or storage can be provisioned dynamically, and when demand decreases, these resources can be scaled back. This ensures efficient resource utilization and cost optimization.

C. Cloud computing increases the physical control of the data resources.

Incorrect: Cloud computing typically reduces physical control over data resources because the infrastructure is managed by the cloud provider. For example, in public cloud models, the customer does not have direct access to the physical servers or data centers. Instead, they rely on the provider’s security and compliance measures.

D. Cloud computing allows access to data any time from any location through the Internet.

Correct: One of the core advantages of cloud computing is ubiquitous access. Users can access applications, services, and data from anywhere with an internet connection. This is particularly beneficial for remote work, collaboration, and global business operations.

JNCIA Cloud References:

The Juniper Networks Certified Associate - Cloud (JNCIA-Cloud) curriculum highlights the key characteristics of cloud computing, including elasticity, scalability, and ubiquitous access. These principles are foundational to understanding how cloud environments operate and how they differ from traditional on-premises solutions.

For example, Juniper Contrail, a software-defined networking (SDN) solution, leverages cloud elasticity to dynamically provision and manage network resources in response to changing demands. Similarly, the ability to access cloud resources remotely aligns with Juniper’s focus on enabling flexible and scalable cloud architectures.

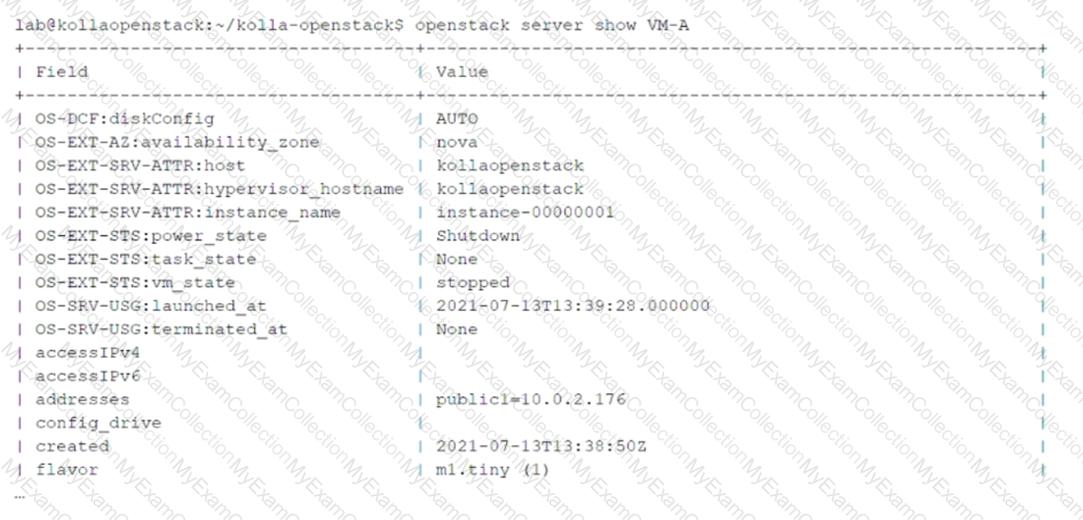

Click the Exhibit button.

You have issued the openstack server show VM-A command and received the output shown in the exhibit.

To which virtual network is the VM-A instance attached?

m1.tiny

public1

Nova

kollaopenstack

Answer: B

Explanation:

The openstack server show command provides detailed information about a specific virtual machine (VM) instance in OpenStack. The output includes details such as the instance name, network attachments, power state, and more. Let’s analyze the question and options:

Key Information from the Exhibit:

The addresses field in the output shows:

public1=10.0.2.176

This indicates that the VM-A instance is attached to the virtual network named public1, with an assigned IP address of 10.0.2.176.
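When scripting this check, the addresses value can be turned into a network-to-IP mapping. The Python sketch below assumes the network=ip layout shown in the exhibit, with several IPs on one network separated by commas and several networks separated by semicolons (the layout used by older python-openstackclient releases; newer releases and -f json output render the field differently), so the format should be verified against your client version. The second network in the test is made up.

```python
def parse_addresses(field: str) -> dict[str, list[str]]:
    """Parse 'net1=ip1, ip2; net2=ip3' into {'net1': ['ip1', 'ip2'], 'net2': ['ip3']}."""
    networks = {}
    for entry in field.split(";"):
        if not entry.strip():
            continue
        net, _, ips = entry.partition("=")
        networks[net.strip()] = [ip.strip() for ip in ips.split(",") if ip.strip()]
    return networks

print(parse_addresses("public1=10.0.2.176"))   # {'public1': ['10.0.2.176']}
```

The keys of the result are the virtual networks the instance is attached to, which is exactly what the question asks for.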

Option Analysis:

A. m1.tiny

Incorrect: m1.tiny refers to the flavor of the VM, which specifies the resource allocation (e.g., CPU, memory, disk). It is unrelated to the virtual network.

B. public1

Correct: The addresses field explicitly states that the VM-A instance is attached to the public1 virtual network.

C. Nova

Incorrect: Nova is the OpenStack compute service that manages VM instances. It is not a virtual network.

D. kollaopenstack

Incorrect: kollaopenstack appears in the output as the hostname or project name but does not represent a virtual network.

Why public1?

Network Attachment: The addresses field in the output directly identifies the virtual network (public1) to which the VM-A instance is attached.

IP Address Assignment: The IP address (10.0.2.176) confirms that the VM is connected to the public1 network.

JNCIA Cloud References:

The JNCIA-Cloud certification emphasizes understanding OpenStack commands and outputs, including the openstack server show command. Recognizing how virtual networks are represented in OpenStack is essential for managing VM connectivity.

For example, Juniper Contrail integrates with OpenStack Neutron to provide advanced networking features for virtual networks like public1.