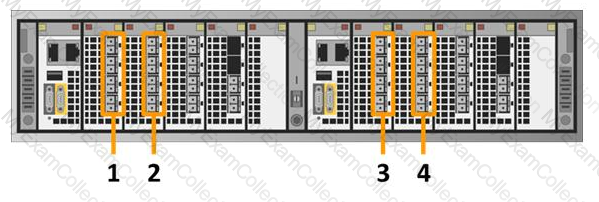

Which number in the exhibit highlights the Director-A back-end ports?

1

2

4

3

Answer:

Explanation:

The exhibit shows the back panel of a Dell VPLEX system. The panel is divided into two sections, each presumably corresponding to a director module, and each section has groups of ports highlighted and labeled with the numbers 1, 2, 3, and 4. Of the options given (1, 2, 4, and 3), the verified answer is number 3. This can be inferred because in such systems ‘A’ typically refers to the first director, or the left side when looking at the back panel.

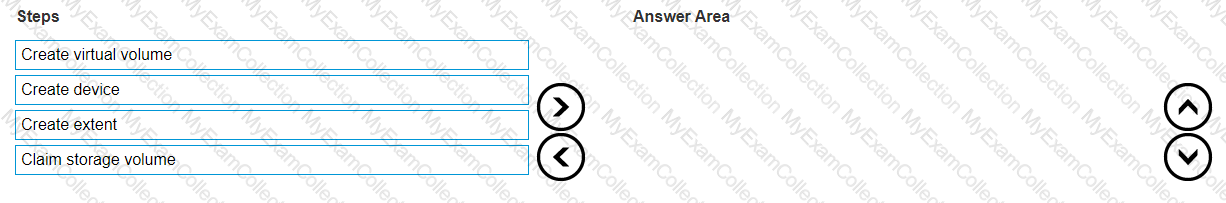

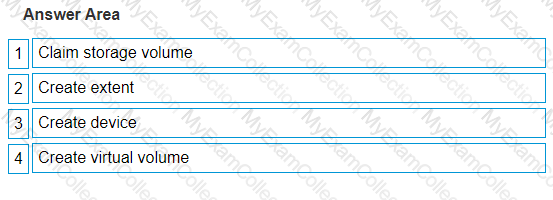

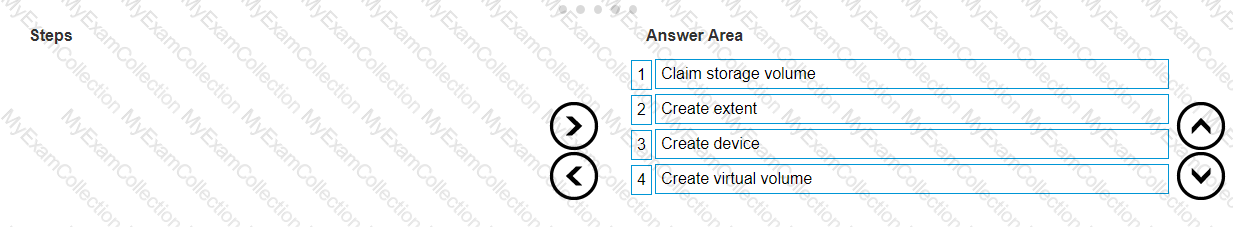

What is the correct order of steps to create a virtual volume?

Answer:

Explanation:

The correct order of steps to create a virtual volume is:

Claim storage volume

Create extent

Create device

Create virtual volume

Claim Storage Volume: The first step involves claiming a storage volume from the underlying storage array. This is where you identify and allocate the physical storage that will be used.

Create Extent: Once the storage volume is claimed, the next step is to create an extent. An extent is a specific range of block addresses within a storage volume.

Create Device: After creating an extent, you then create a device. A device in VPLEX terminology is a logical representation of storage that can be used by hosts.

Create Virtual Volume: The final step is to create a virtual volume from the device. A virtual volume is what is presented to hosts; it’s the logical unit that they will access for storage.

This process ensures that storage is properly allocated and managed within the VPLEX environment, allowing for flexibility and efficiency in storage utilization.

For detailed procedures and best practices, it’s recommended to consult the official Dell VPLEX documentation and training materials, which provide in-depth guidance on managing and configuring VPLEX systems.
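The ordering above can be sketched as a small Python model that rejects any step taken out of sequence. This is only an illustration of the dependency chain: the stage labels and the `ProvisioningPlan` class are hypothetical names for this sketch, not VPLEX CLI commands or API calls.

```python
from dataclasses import dataclass, field
from typing import List

# Stages in the order given above. These strings are illustrative labels,
# not real VPLEX commands.
STAGES = [
    "claim storage volume",
    "create extent",
    "create device",
    "create virtual volume",
]


@dataclass
class ProvisioningPlan:
    """Tracks which stages have run and enforces the required order."""
    completed: List[str] = field(default_factory=list)

    def run(self, stage: str) -> None:
        # The next allowed stage is the first one that has not run yet.
        done = len(self.completed)
        expected = STAGES[done] if done < len(STAGES) else None
        if stage != expected:
            raise ValueError(f"expected {expected!r}, got {stage!r}")
        self.completed.append(stage)


plan = ProvisioningPlan()
for stage in STAGES:
    plan.run(stage)
```

Calling `run("create device")` on a fresh plan raises `ValueError`, which mirrors the point that a device cannot exist before its extent.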

Using the Storage Volume expansion method for virtual volumes built on RAID-1 or distributed RAID-1 devices, what is the maximum number of initialization processes that can run concurrently, per cluster?

500

1000

100

250

Answer:

What is a VPLEX RAID-0 device?

Device that concatenates data on a top-level device

Extent that concatenates data on a top-level device

Extent that stripes data beneath a top-level device

Device that stripes data beneath a top-level device

Answer:

Explanation:

A VPLEX RAID-0 device is a configuration where data is striped across multiple storage volumes to improve performance. Here’s a detailed explanation:

RAID-0 Definition: RAID-0 is a disk array configuration that involves striping data across multiple disks without redundancy. This increases performance because I/O operations can be performed concurrently on all disks.

Device Stripes Data: In the context of VPLEX, a device refers to a logical unit that is created from physical storage volumes. When a VPLEX device is configured as RAID-0, it stripes data across the underlying storage volumes.

Top-Level Device: The term ‘top-level device’ refers to the logical device that is presented to hosts and applications. In VPLEX, this top-level device is the virtual volume that is created from one or more RAID-configured devices.

Striping Beneath Top-Level Device: The striping occurs beneath the top-level device, meaning that it is handled within the VPLEX system and is transparent to the host. The host sees a single logical unit, while the VPLEX system manages the distribution of data across the physical volumes.

Performance Considerations: RAID-0 devices provide better performance since data is retrieved from several storage volumes at the same time. However, RAID-0 devices do not include a mirror to provide data redundancy. They are used for non-critical data that requires high speed and low cost of implementation.

By configuring a VPLEX device as RAID-0, administrators can leverage the performance benefits of striping for applications that require high throughput and do not need the redundancy provided by other RAID levels.
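To make the striping idea concrete, here is a minimal sketch of how a generic RAID-0 layout maps a logical block to one of the underlying legs. It shows textbook round-robin striping, not VPLEX internals; the function name, `num_legs`, and `stripe_depth` are assumptions made for this example.

```python
from typing import Tuple


def locate_block(logical_block: int, num_legs: int, stripe_depth: int) -> Tuple[int, int]:
    """Return (leg_index, block_offset_on_leg) for a logical block.

    Generic round-robin striping: consecutive stripes of `stripe_depth`
    blocks are placed on consecutive legs.
    """
    if logical_block < 0 or num_legs < 1 or stripe_depth < 1:
        raise ValueError("arguments must be non-negative / positive")
    stripe_index, within_stripe = divmod(logical_block, stripe_depth)
    leg = stripe_index % num_legs    # which leg holds this stripe
    row = stripe_index // num_legs   # how many full rows precede it
    return leg, row * stripe_depth + within_stripe


# Two legs, four blocks per stripe: blocks 0-3 land on leg 0,
# blocks 4-7 on leg 1, blocks 8-11 back on leg 0, and so on.
layout = [locate_block(b, num_legs=2, stripe_depth=4) for b in (0, 4, 8, 9)]
```

Because neighboring stripes sit on different legs, a large sequential read can be served by several storage volumes at once, which is the performance benefit described above. Losing any one leg loses part of every row, which is why there is no redundancy.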

What is a consideration when using VPLEX RecoverPoint enabled consistency groups?

Production and local copy journals must be in different consistency groups.

Repository volume and journal volumes must be in different consistency groups.

Local virtual volumes and distributed virtual volumes can be in the same consistency group.

Local copy volumes and production volumes must reside in separate consistency groups.

Answer:

Explanation:

When using VPLEX with RecoverPoint enabled consistency groups, it’s important to consider how journals are managed:

Production Journals: These are used by the production volumes for logging write I/Os before they are replicated to the remote site or local copy.

Local Copy Journals: These are used by the local copy volumes for the same purpose as production journals but are specific to the local copies.

Separation of Journals: To ensure proper replication and recovery processes, production and local copy journals must be kept in separate consistency groups. This separation is crucial for maintaining the integrity of the replication and avoiding conflicts between production and local copy operations.

RecoverPoint Configuration: In a VPLEX environment, RecoverPoint provides continuous data protection and replication. It is configured to work with VPLEX consistency groups to ensure that all writes are captured and can be recovered in case of a failure.

Best Practices: Dell’s best practices for VPLEX RecoverPoint configurations recommend this separation of journals to ensure that the system can handle failover scenarios correctly and that data is not lost or corrupted.

By following this consideration, storage administrators can ensure that their VPLEX RecoverPoint environment is configured for optimal data protection and disaster recovery readiness.

Which data mobility operation removes the pointer to the source leg of a RAID-1 device?

Remove

Start

Commit

Clean

Answer:

Explanation:

The data mobility operation that removes the pointer to the source leg of a RAID-1 device in Dell VPLEX is the “Clean” operation. This operation is part of the data mobility process in VPLEX, which involves migrating data from one storage volume to another.

Data Mobility: Data mobility in VPLEX allows for the non-disruptive movement of data between storage volumes, which is often used for technology refreshes, load balancing, or other maintenance activities.

RAID-1 Device: A RAID-1 device in VPLEX is a virtual volume that provides data redundancy by mirroring data across two storage volumes, known as legs.

Clean Operation: The “Clean” operation is used after the data has been successfully migrated to the new storage volume (target leg). It removes the pointer from the old storage volume (source leg), effectively completing the migration process.

Pointer Removal: Removing the pointer to the source leg is an important step to ensure that the VPLEX system no longer references the old storage volume for read or write operations, and all I/O is directed to the new volume.

Finalization: Once the “Clean” operation is performed, the source leg can be safely decommissioned or repurposed, as it is no longer part of the RAID-1 device configuration.

By using the “Clean” operation, administrators can ensure that the data mobility process is completed efficiently and that the VPLEX system maintains data integrity and continuity of service.

When is expanding a virtual volume using the Storage Volume expansion method a valid option?

Virtual volume has minor problems, as reported by health-check

Virtual volume is mapped 1:1 to the underlying storage volume

Virtual volume is a metadata volume

Virtual volume previously expanded by adding extents or devices

Answer:

Explanation:

Expanding a virtual volume using the Storage Volume expansion method is a valid option when the virtual volume is mapped 1:1 to the underlying storage volume. This method is suitable when each virtual volume corresponds directly to a single storage volume on the backend array, and there is a need to expand the volume’s capacity.

1:1 Mapping: A 1:1 mapping means that there is a direct relationship between a virtual volume in VPLEX and a single storage volume on the backend storage array. This allows for a straightforward expansion process as any increase in the size of the backend volume can be reflected in the virtual volume.

Storage Volume Expansion: The Storage Volume expansion method involves increasing the size of the backend storage volume first. This is typically done through the storage array’s management interface.

VPLEX Recognition: Once the backend storage volume is expanded, VPLEX must recognize the new size. This may require rescanning the storage volumes within VPLEX to detect the changes.

Virtual Volume Expansion: After VPLEX recognizes the increased size of the storage volume, the corresponding virtual volume can be expanded to utilize the additional capacity. This is done within the VPLEX management interface.

Exclusion of Other Options: The other options listed, such as a virtual volume having minor problems, being a metadata volume, or previously expanded by adding extents or devices, are not typically associated with the Storage Volume expansion method. These scenarios may require different approaches or may not be suitable for expansion using this method.

By ensuring that the virtual volume is mapped 1:1 to the underlying storage volume, administrators can effectively utilize the Storage Volume expansion method to increase the capacity of virtual volumes in a VPLEX environment.
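As a rough illustration of the 1:1 condition, the sketch below models a virtual volume by the extents beneath it and checks whether it rests on exactly one extent that covers its whole storage volume. That reading of "1:1" is an assumption for this example, and the `Extent` and `VirtualVolume` classes are hypothetical, not VPLEX object types.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Extent:
    storage_volume: str          # name of the backing storage volume
    blocks: int                  # blocks used by this extent
    storage_volume_blocks: int   # total blocks on the backing storage volume


@dataclass
class VirtualVolume:
    name: str
    extents: List[Extent]


def is_one_to_one(volume: VirtualVolume) -> bool:
    """True when the volume sits on a single extent spanning its whole storage volume."""
    if len(volume.extents) != 1:
        return False
    extent = volume.extents[0]
    return extent.blocks == extent.storage_volume_blocks


simple = VirtualVolume("vv_simple", [Extent("sv_01", 1000, 1000)])
concatenated = VirtualVolume(
    "vv_concat",
    [Extent("sv_02", 500, 500), Extent("sv_03", 500, 500)],
)
```

Here `simple` passes the check, while `concatenated` does not because it was built from more than one extent.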

What are characteristics of a storage view?

An initiator can only be in multiple storage view

VPLEX FE port can be in multiple storage views

Each initiator and FE port pair must be in different storage views

An initiator can be in multiple storage views

VPLEX FE port can be in multiple storage views

Each initiator and FE port pair can only be in one storage view

An initiator can only be in one storage view

VPLEX FE port can be in multiple storage views

Each initiator and FE port pair can be in different storage views

An initiator can be in multiple storage views

VPLEX FE port can only be in one storage view

Each initiator and FE port pair can only be in one storage view

Answer:

Explanation:

A storage view in Dell VPLEX is a logical construct that defines the visibility and access relationships between hosts (initiators), storage (virtual volumes), and VPLEX front-end (FE) ports. Here’s a detailed explanation of the characteristics:

Initiator Multiplicity: An initiator, which is typically a host’s HBA (Host Bus Adapter) port, can indeed be part of multiple storage views. This allows a single host to access different sets of virtual volumes through different storage views.

VPLEX FE Port Multiplicity: VPLEX FE ports can also be included in multiple storage views. This design provides flexibility in connecting multiple hosts to various virtual volumes through shared FE ports.

Initiator and FE Port Pairing: While both initiators and FE ports can exist in multiple storage views, each unique initiator and FE port pair can only be part of one storage view. This rule ensures that the path from a host to a virtual volume through a specific FE port is uniquely defined, preventing any ambiguity in the data access path.

References:

The Dell VPLEX documentation outlines the concept of storage views and their characteristics, emphasizing the importance of properly configuring storage views to ensure correct visibility and access control between hosts and storage resources.

Best practices guides and technical whitepapers provided by Dell further explain how to configure storage views in VPLEX, detailing the relationships between initiators, FE ports, and virtual volumes.

By understanding these characteristics, a VPLEX storage administrator can effectively manage access to storage resources, ensuring that hosts have the necessary visibility to the virtual volumes they require for their operations.
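The pairing rule described above can be checked mechanically. The following sketch takes a hypothetical description of storage views (plain dictionaries with made-up view, initiator, and port names) and reports any initiator and FE port pair that appears in more than one view. It illustrates the rule as stated here and is not a VPLEX tool.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

# Hypothetical structure: view name -> {"initiators": {...}, "fe_ports": {...}}
Views = Dict[str, Dict[str, Set[str]]]


def find_pair_conflicts(views: Views) -> Dict[Tuple[str, str], List[str]]:
    """Return every (initiator, fe_port) pair that occurs in more than one view."""
    seen: Dict[Tuple[str, str], List[str]] = defaultdict(list)
    for name, view in views.items():
        for initiator in view["initiators"]:
            for port in view["fe_ports"]:
                seen[(initiator, port)].append(name)
    return {pair: names for pair, names in seen.items() if len(names) > 1}


views: Views = {
    # The same initiator appears in two views, but through different FE ports.
    "view_a": {"initiators": {"hba0"}, "fe_ports": {"fe_port_1"}},
    "view_b": {"initiators": {"hba0"}, "fe_ports": {"fe_port_2"}},
    # The same FE port is shared with a different initiator.
    "view_c": {"initiators": {"hba1"}, "fe_ports": {"fe_port_1"}},
}
conflicts_before = find_pair_conflicts(views)

# Adding a view that reuses the (hba0, fe_port_1) pair violates the rule.
views["view_d"] = {"initiators": {"hba0"}, "fe_ports": {"fe_port_1"}}
conflicts_after = find_pair_conflicts(views)
```

The first three views share initiators and ports freely without conflict; only the repeated pair in `view_d` is flagged.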

VPLEX Metro has been added to an existing HP OpenView network monitoring environment. The VPLEX SNMP agent and other integration information have been added to assist in the implementation. After VPLEX is added to SNMP monitoring, only the remote VPLEX cluster is reporting performance statistics.

What is the cause of this issue?

TCP Port 443 is blocked at the local site's firewall.

Local VPLEX Witness has a misconfigured SNMP agent.

HP OpenView is running SNMP version 2C, which may cause reporting that does not contain the performance statistics.

Local VPLEX cluster management server has a misconfigured SNMP agent.

Answer:

Explanation:

When only the remote VPLEX cluster is reporting performance statistics in an HP OpenView network monitoring environment, the cause is likely due to a misconfiguration of the SNMP agent on the local VPLEX cluster management server. Here’s a detailed explanation:

SNMP Agent Role: The SNMP (Simple Network Management Protocol) agent is responsible for collecting and sending performance statistics from the VPLEX cluster to the monitoring system.

Misconfiguration Symptoms: If the SNMP agent is misconfigured on the local VPLEX cluster management server, it may fail to collect or send the correct performance data, resulting in missing statistics in the monitoring system.

Troubleshooting Steps: To resolve this issue, the VPLEX administrator should verify the SNMP configuration on the local VPLEX cluster management server. This includes checking the community strings, SNMP version, and target IP addresses for the monitoring system.

Correct Configuration: Ensuring that the SNMP agent is correctly configured on the local VPLEX cluster management server will allow it to report performance statistics accurately to the HP OpenView network monitoring environment.

Monitoring Continuity: Proper configuration of the SNMP agent is crucial for continuous and comprehensive monitoring of the VPLEX environment, which is essential for maintaining system performance and availability.

By addressing the misconfiguration of the SNMP agent on the local VPLEX cluster management server, the service provider can restore full monitoring capabilities and ensure that both local and remote VPLEX clusters report performance statistics as expected.
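One simple way to approach the troubleshooting step above is to compare the settings of the working (remote) agent with those of the non-reporting (local) agent. The sketch below does that for two plain dictionaries. The keys and values are hypothetical placeholders chosen for this example; they are not VPLEX configuration field names.

```python
from typing import Dict, Optional, Tuple


def differing_settings(
    local: Dict[str, str], remote: Dict[str, str]
) -> Dict[str, Tuple[Optional[str], Optional[str]]]:
    """Return {setting: (local_value, remote_value)} for every setting that differs."""
    keys = sorted(set(local) | set(remote))
    return {
        key: (local.get(key), remote.get(key))
        for key in keys
        if local.get(key) != remote.get(key)
    }


# Hypothetical snapshots of the two agents' settings.
remote_agent = {"community": "monitoring", "version": "2c", "target": "192.0.2.10"}
local_agent = {"community": "monitorng", "version": "2c", "target": "192.0.2.10"}

diff = differing_settings(local_agent, remote_agent)
```

In this made-up case the only difference is a mistyped community string on the local agent, which would be enough to keep the monitoring station from accepting its data.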

At which stage of configuring a virtual volume on VPLEX is the RAID level defined?

Claimed volume

Extent

Device

Storage volume

Answer:

Explanation:

The RAID level for a virtual volume on VPLEX is defined at the device stage. Here’s the explanation:

Claimed Volume: This is the initial stage where a physical volume from a storage array is claimed by VPLEX. At this point, no RAID configuration is applied.

Extent: After claiming the volume, VPLEX divides it into extents, which are logical subdivisions of the claimed volume. Extents still do not have RAID configurations.

Device: This is the stage where RAID is applied. A VPLEX device is created from one or more extents, and it is at this point that the RAID level is defined. The device can be configured with various RAID levels, depending on the desired performance and redundancy requirements.

Storage Volume: The term ‘storage volume’ typically refers to the physical storage on the array before it is claimed by VPLEX. It does not have a RAID level associated with it until it is claimed and turned into a VPLEX device.

By defining the RAID level at the device stage, VPLEX allows for flexibility and resilience in how data is stored and protected across the storage infrastructure.

At which layer of the director IO stack are local and distributed mirroring managed?

Coherent Cache

Storage View

Storage Volume

Device Virtualization

Answer:

Explanation:

Local and distributed mirroring in a VPLEX environment are managed at the device virtualization layer of the director IO stack. Here’s the explanation:

Device Virtualization Layer: This layer is responsible for the creation and management of virtualized storage devices in VPLEX. It abstracts the physical storage and presents it as virtual volumes that can be accessed by hosts.

Mirroring Management: Both local and distributed mirroring are configured at the device level. Local mirroring involves creating RAID-1 devices within the same VPLEX cluster, while distributed mirroring involves creating distributed devices across two VPLEX clusters.

Coherent Cache: The coherent cache layer is involved in maintaining cache coherency across the VPLEX clusters but does not manage the mirroring of devices.

Storage View: The storage view layer is where hosts are mapped to virtual volumes. It does not manage the mirroring of devices but rather the access to them.

Storage Volume: The storage volume layer represents the physical storage volumes from backend arrays before they are virtualized by VPLEX. It is not the layer where mirroring is managed.

By managing mirroring at the device virtualization layer, VPLEX ensures that data is protected and available across multiple storage systems, providing high availability and disaster recovery capabilities.
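The distinction between local and distributed mirroring comes down to where the mirror legs live. As a small sketch, the function below classifies a RAID-1 device from the cluster of each leg. The function name and the cluster labels are assumptions for this example only.

```python
from typing import Sequence


def mirror_scope(leg_clusters: Sequence[str]) -> str:
    """Classify a mirrored device as 'local' or 'distributed'.

    `leg_clusters` lists the cluster that hosts each mirror leg.
    """
    if len(leg_clusters) < 2:
        raise ValueError("a mirror needs at least two legs")
    # All legs in one cluster -> local mirror; legs spread over clusters -> distributed.
    return "local" if len(set(leg_clusters)) == 1 else "distributed"


local_mirror = mirror_scope(["cluster-1", "cluster-1"])
distributed_mirror = mirror_scope(["cluster-1", "cluster-2"])
```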

When using VPLEX Metro, what is the supported round trip time between clusters?

30 ms

20 ms

15 ms

5 ms

Answer:

Explanation:

When using VPLEX Metro, the supported round trip time (RTT) between clusters is 5 milliseconds (ms). This is the maximum latency that is supported to ensure proper synchronization and performance of the VPLEX Metro system.

VPLEX Metro: VPLEX Metro is a storage virtualization solution that allows for the creation of distributed virtual volumes across two geographically separated clusters.

Round Trip Time (RTT): RTT is the time it takes for a signal to travel from one cluster to another and back again. It is a critical factor in the performance of distributed systems like VPLEX Metro.

5 ms Limitation: The 5 ms RTT limitation is set to ensure that the clusters can maintain synchronization without significant performance degradation. Latencies higher than this can lead to issues with data consistency and application performance.

Network Considerations: When planning a VPLEX Metro deployment, it is important to consider the network infrastructure and ensure that the RTT between clusters does not exceed the 5 ms threshold.

Performance Impact: Adhering to the 5 ms RTT is crucial for maintaining the high availability and data mobility features of VPLEX Metro, as it affects the ability to perform real-time data mirroring and failover between clusters.

By ensuring that the RTT between VPLEX Metro clusters does not exceed 5 ms, organizations can achieve the desired level of performance and reliability from their VPLEX Metro deployment.
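When planning a link, measured RTT samples can be compared against the limit quoted above. The sketch below is a minimal check that uses the worst observed sample. The 5 ms constant is taken from this explanation, and the function name and sample values are made up for illustration.

```python
from typing import Sequence

METRO_MAX_RTT_MS = 5.0  # limit stated in the explanation above


def rtt_within_limit(samples_ms: Sequence[float], limit_ms: float = METRO_MAX_RTT_MS) -> bool:
    """Return True when the worst observed round trip time is within the limit."""
    if not samples_ms:
        raise ValueError("at least one RTT sample is required")
    return max(samples_ms) <= limit_ms


# Hypothetical measurements in milliseconds.
good_link = rtt_within_limit([2.1, 2.4, 3.0])
bad_link = rtt_within_limit([2.1, 6.8, 3.0])
```

Using the maximum rather than the average is a deliberately conservative choice for this sketch, since a single slow round trip delays the synchronous write that depends on it.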

What condition would prevent volume expansion?

Migration occurring on the volume

Volume not belonging to a consistency group

Metadata volume being backed up

Logging volume in re-synchronization state

Answer:

Explanation:

Volume expansion in Dell VPLEX is a process that allows for increasing the size of a virtual volume. However, certain conditions can prevent this operation from taking place:

Migration Occurring on the Volume: If there is an ongoing migration process involving the volume, it cannot be expanded until the migration is complete. This is because the volume’s data layout is being altered during migration, and any attempt to change its size could lead to data corruption or other issues.

Consistency Group Membership: Whether or not a volume belongs to a consistency group does not directly prevent volume expansion. Consistency groups in VPLEX are used to ensure write-order fidelity across multiple volumes but do not restrict the expansion of individual volumes within the group.

Metadata Volume Backup: Backing up a metadata volume is a separate operation that does not interfere with the ability to expand a storage volume. Metadata backups are typically performed to preserve the configuration and state information of the VPLEX system.

Logging Volume Re-synchronization: While a logging volume in a re-synchronization state indicates that there is an ongoing process to align data across clusters or devices, it does not inherently prevent the expansion of a storage volume.

Therefore, the condition that would prevent volume expansion is a migration occurring on the volume.

What can be used to monitor VPLEX performance parameters?

EMCREPORTS utility

Unisphere Performance Monitor Dashboard

VPLEX Cluster Witness

EMC SolVe Desktop

Answer:

Explanation:

The Unisphere Performance Monitor Dashboard is used to monitor VPLEX performance parameters. This dashboard is part of the Unisphere for VPLEX management suite and provides a graphical interface for monitoring various performance metrics.

Unisphere for VPLEX: Unisphere for VPLEX is the management interface for VPLEX systems. It provides administrators with tools to configure, manage, and monitor VPLEX environments.

Performance Monitoring: The Performance Monitor Dashboard within Unisphere allows administrators to view real-time and historical performance data. This includes metrics such as I/O rates, latency, and throughput.

Dashboard Features: The dashboard offers various features such as performance charts, threshold alerts, and detailed reports that help in identifying performance trends and potential issues.

Accessibility: The dashboard is accessible through the Unisphere web interface, making it convenient for administrators to monitor the VPLEX system from any location.

Usage: To use the Performance Monitor Dashboard, administrators log into Unisphere for VPLEX, navigate to the performance section, and can then view and analyze the performance data presented in the dashboard.

By using the Unisphere Performance Monitor Dashboard, administrators can effectively monitor VPLEX performance parameters, ensuring the system operates efficiently and meets performance requirements.

Which type of mobility is used to move data to a remote cluster in a VPLEX Metro?

Device

Virtual volume

MetroPoint

Extent

Answer:

Explanation:

In a VPLEX Metro environment, the type of mobility used to move data to a remote cluster is known as device mobility. This process involves the migration of virtual volumes that are backed by VPLEX devices across the two clusters that make up the VPLEX Metro.

Here’s a detailed explanation:

Device Mobility: Device mobility refers to the capability of VPLEX to move a device, which is a logical representation of storage, from one cluster to another within a VPLEX Metro configuration.

VPLEX Metro: VPLEX Metro is a configuration that allows for synchronous data replication and accessibility between two geographically separated clusters. It provides continuous availability and non-disruptive data mobility.

Migration Process: The migration of data in a VPLEX Metro involves several steps, starting with the creation of a mobility job, followed by the actual data movement, and finally, the cleanup and completion of the job.

CLI and GUI Tools: While earlier versions of VPLEX required the use of the Command Line Interface (CLI) for mobility in a Metro configuration, newer versions support this functionality through the Graphical User Interface (GUI) as well.

Use Cases: Device mobility is often used for load balancing, tech refreshes, or other scenarios where data needs to be moved between clusters without disrupting access to the data.

By utilizing device mobility, VPLEX Metro allows for the seamless movement of data across clusters, ensuring high availability and flexibility in data management.