You have a Kafka client application that has real-time processing requirements.

Which Kafka metric should you monitor?

This schema excerpt is an example of which schema format?

    package com.mycorp.mynamespace;

    message SampleRecord {
      int32 Stock = 1;
      double Price = 2;
      string Product_Name = 3;
    }

Which partition assignment minimizes partition movements between two assignments?
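To make "partition movements between two assignments" concrete, the sketch below counts how many partitions change owner when a third consumer joins a group. The helper `count_movements`, the consumer names, and both candidate assignments are hypothetical illustrations, not the output of any particular Kafka assignor.

```python
def count_movements(before, after):
    """Count partitions whose owning consumer differs between two assignments."""
    owner_before = {p: c for c, parts in before.items() for p in parts}
    owner_after = {p: c for c, parts in after.items() for p in parts}
    return sum(1 for p, c in owner_after.items() if owner_before.get(p) != c)


# Hypothetical group: six partitions shared by two consumers.
before = {"c1": [0, 1, 2], "c2": [3, 4, 5]}

# Two balanced ways to include a new consumer c3 (both invented for illustration).
reshuffled = {"c1": [0, 1], "c2": [2, 3], "c3": [4, 5]}   # recomputed from scratch
preserving = {"c1": [0, 1], "c2": [3, 4], "c3": [2, 5]}   # keeps existing owners where possible

print(count_movements(before, reshuffled))
print(count_movements(before, preserving))
```

Both results are equally balanced, but the second moves fewer partitions, which is the property the question asks about.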

You have a consumer group with default configuration settings reading messages from your Kafka cluster.

You need to optimize throughput so the consumer group processes more messages in the same amount of time.

Which change should you make?

What are three built-in abstractions in the Kafka Streams DSL?

(Select three.)

Which configuration allows more time for the consumer poll to process records?
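As background for this question, two consumer settings interact here: `max.poll.interval.ms` bounds the delay allowed between calls to `poll()` before the consumer is considered failed, and `max.poll.records` caps how many records a single poll returns. The sketch below is only arithmetic on those two values; the property names follow the Java consumer documentation, the override values are illustrative assumptions, and `per_record_budget_ms` is a hypothetical helper.

```python
# Illustrative overrides; property names as documented for the Java consumer.
consumer_overrides = {
    "max.poll.interval.ms": 600_000,  # documented default is 300000 (5 minutes)
    "max.poll.records": 250,          # documented default is 500
}


def per_record_budget_ms(max_poll_interval_ms, max_poll_records):
    """Average processing time available per record before the poll deadline."""
    return max_poll_interval_ms / max_poll_records


default_budget = per_record_budget_ms(300_000, 500)
tuned_budget = per_record_budget_ms(
    consumer_overrides["max.poll.interval.ms"],
    consumer_overrides["max.poll.records"],
)
print(default_budget, tuned_budget)
```

Other client libraries may spell these settings differently or not expose both, so treat the dictionary as a notation aid rather than a ready-made client configuration.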

You need to set alerts on key broker metrics to trigger notifications when the cluster is unhealthy.

Which three broker metrics should you monitor at a minimum?

(Select three.)

You need to collect logs from a host and write them to a Kafka topic named 'logs-topic'. You decide to use the Kafka Connect File Source connector for this task.

What is the preferred deployment mode for this connector?

You are sending messages to a Kafka cluster in JSON format and want to add more information related to each message:

Format of the message payload

Message creation time

A globally unique identifier that allows the message to be traced through the system

Where should this additional information be set?
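For context, Kafka records can carry headers, which are key/value pairs kept separate from the message payload. The sketch below builds the three pieces of metadata listed above as a list of `(str, bytes)` pairs, a shape that common Python clients accept for headers. The header names and the `build_headers` helper are hypothetical, and no producer is created here.

```python
import time
import uuid


def build_headers(content_type="application/json"):
    """Return metadata as (name, bytes) pairs; header names are illustrative."""
    return [
        ("content-type", content_type.encode("utf-8")),                   # payload format
        ("created-at-ms", str(int(time.time() * 1000)).encode("utf-8")),  # creation time
        ("trace-id", str(uuid.uuid4()).encode("utf-8")),                  # unique identifier
    ]


headers = build_headers()
for name, value in headers:
    print(name, value.decode("utf-8"))
```

Keeping this metadata out of the JSON body means consumers and intermediaries can read it without deserializing the payload.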

Your company has three Kafka clusters: Development, Testing, and Production.

The Production cluster is running out of storage, so you add a new node.

Which two statements about the new node are true?

(Select two.)

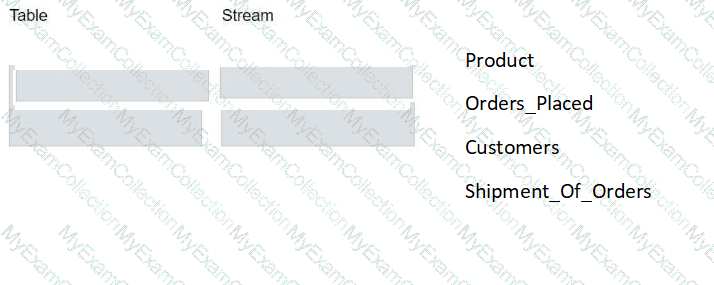

You are creating a Kafka Streams application to process retail data.

Match the input data streams with the appropriate Kafka Streams object.

Which tool can you use to modify the replication factor of an existing topic?

You need to consume messages from Kafka using the command-line interface (CLI).

Which command should you use?

What is the default maximum size of a message the Apache Kafka broker can accept?